5 Use Cases for DynamoDB

Introduction

Web-based applications face scaling due to the growth of users along with the increasing complexity of data traffic.

Along with the modern complexity of business comes the need to process data faster and more robustly. Because of this, standard transactional databases aren’t always the best fit.

Instead, databases such as DynamoDB have been designed to manage the new influx of data. DynamoDB is an Amazon Web Services database system that supports data structures and key-valued cloud services. It allows users the benefit of auto-scaling, in-memory caching, backup and restore options for all their internet-scale applications using DynamoDB.

But why would you want to use DynamoDB and what are some examples of use cases?

In this post, we’ll cover just that. We will layout the benefits of using DynamoDB, and outline some use cases as well as some of the challenges.

Benefits of DynamoDB for Operations

First, let’s discuss why DynamoDB can be useful.

Performance and scalability

Those who have worked in the IT industry know that scaling databases can both be difficult and risky. DynamoDB gives you the ability to auto-scale by tracking how close your usage is to the upper bounds. This can allow your system to adjust according to the amount of data traffic, helping you to avoid issues with performance while reducing costs.

Access to control rules

As data gets more specific and personal, it becomes more important to have effective access control. You want to easily apply access control to the right people without creating bottlenecks in other people’s workflow. The fine-grained access control of DynamoDB allows the table owner to gain a higher level of control over the data in the table.

Persistence of event stream data

DynamoDB streams allow developers to receive and update item-level data before and after changes in that data. This is because DynamoDB streams provide a time-ordered sequence of changes made to the data within the last 24 hours. With streams, you can easily use the API to make changes to a full-text search data store such as Elasticsearch, push incremental backups to Amazon S3, or maintain an up-to-date read-cache.

Time To Live

TTL or Time-to-Live is a process that allows you to set timestamps for deleting expired data from your tables. As soon as the timestamp expires, the data that is marked to expire is then deleted from the table. Through this functionality, developers can keep track of expired data and delete it automatically. This process also helps in reducing storage and cutting the costs of manual data deletion work.

Storage of inconsistent schema items

If your data objects are required to be stored in inconsistent schemas, DynamoDB can manage that. Since DynamoDB is a NoSQL data model, it handles less structured data more efficiently than a relational data model, which is why it’s easier to address query volumes and offers high performance queries for item storage in inconsistent schemas.

Automatic data management

DynamoDB constantly creates a backup of your data for safety purposes which allows owners to have data saved on the cloud.

5 Use Cases for DynamoDB

One of the reasons people don’t use DynamoDB is because they are uncertain whether it is a good fit for their project. We wanted to share some examples where companies are using DynamoDB to help manage the larger influx and of data at high speeds.

Duolingo

Duolingo, an online learning site, uses DynamoDB to store approximately 31 billion data objects on their web server.

This startup has around 18 million monthly users who perform around six billion exercises using the Duolingo app.

Because their application has 24,000 read units per second and 3,300 write units per second DynamoDB ended up being the right fit for them. The team had very little knowledge about DevOps and managing large scale systems when they started. Because of Duolingo’s global usage and need for personalized data, DynamoDB is the only database that has been able to meet their needs, both in terms of data storage and DevOps.

Also, the fact that DynamoDB scales automatically meant that this small startup did not need to use their developers to manually adjust the size. DynamoDB has simplified as well as scaled to meet their needs.

Major League Baseball (MLB)

There’s a lot we take for granted when we watch a game of baseball.

For example, did you know there’s a Doppler radar system that sits behind home plate, sampling the ball position 2,000 times a second? Or that there are two stereoscopic imaging devices, usually positioned above the third-base line, that sample the positions of players on the field 30 times a second?

All these data transactions require a system that is fast on both reads and writes. The MLB uses a combination of AWS components to help process all this data. DynamoDB plays a key role in ensuring queries are fast and reliable.

Hess Corporation

Hess Corporation, a well-known energy company, has been working on the exploration and production of natural gas and crude oil.

This business requires strategizing different financial planning which impacts management on the whole. To streamline their business processes, Hess turned towards DynamoDB by shifting its E&P (Energy Exploration and Production) project onto AWS.

Now DynamoDB has helped the company in separating potential buyers’ data from business systems. Moreover, the operational infrastructure of DynamoDB helps them to handle data effectively and get optimized and well-managed results.

GE Healthcare

GE is well-known for medical imaging equipment that helps in diagnostics through radiopharmaceuticals and imaging agents.

The company has used DynamoDB to increase customer value, enabled by cloud access, storage, and computation.

The GE Health Cloud provides a single portal for healthcare professionals all over the US to process and share images of patient cases. This is a great advantage for diagnostics. Clinicians can improve treatments through access to this healthcare data,

Docomo

NTT Docomo, a popular mobile phone operating company, has built a reputation for its voice recognition services, which need the best performance and capacity.

To cater to these requirements, Docomo turned towards DynamoDB which has helped the company scale towards better performance.

With their growing customer base, Docomo has brought a voice recognition architecture into use, which helps them perform better even during traffic spikes.

Along with all these cases, advertising technology companies also rely heavily on Amazon DynamoDB to store their marketing data of different types.

This data includes user events, user profiles, visited links and clicks. Sometimes, this data also includes ad targeting, attribution, and real-time bidding.

Thus, ad tech companies require low latency, high request rate and high performance without having to invest heavily in database operations.

This is why companies turn towards DynamoDB. It not only offers high performance but also, with its data replication option, allows companies to deploy their real-time applications in more than one geographical location.

However, despite all the benefits DynamoDB isn’t always the easiest database to use when it comes to analytics.

Challenges of Analyzing DynamoDB Operational Data

DynamoDB’s focus is on providing fast data transactions for applications. What makes DynamoDB fast on a transaction level can actually hinder it from the perspective of analyzing data. Here are a few of the major roadblocks you will run into once you start analyzing data in DynamoDB.

Online Analytical Processing (OLAP)

Online analytical processing and data warehousing systems usually require huge amounts of aggregating, as well as the joining of dimensional tables, which are provided in a normalized or relational view of data.

This is not possible in the case of DynamoDB since it’s a non-relational database that works better with NoSQL formatted data tables. Besides, the general data structures for analytics aren’t always well supported in key-value databases. In turn, it can be harder to get to data and run large computations.

Querying and SQL

Along with OLAP processes being difficult to run on DynamoDB, due to the focus of DynamoDB being operational, DynamoDB does not interface with SQL.

This is a key issue because most analytical talent is familiar with SQL and not DynamoDB queries. In turn, this makes it difficult to interact with the data and ask critical analytical questions.

The consequences of this can be the requirement to hire developers solely to extract the data, which is expensive, or being unable to analyze the data at all.

Indexing is expensive

Another qualm when it comes to analytics is processing large data sets quickly. Often this can be alleviated with indexes.

In this case, the problem is that DynamoDB’s global secondary indexes require additional read and write capacity provisioned, leading to additional cost. This means that either your queries will run slower or you will incur greater costs.

These challenges can sometimes be a hindrance to some companies deciding whether or not they want to take on the risk of developing on DynamoDB.

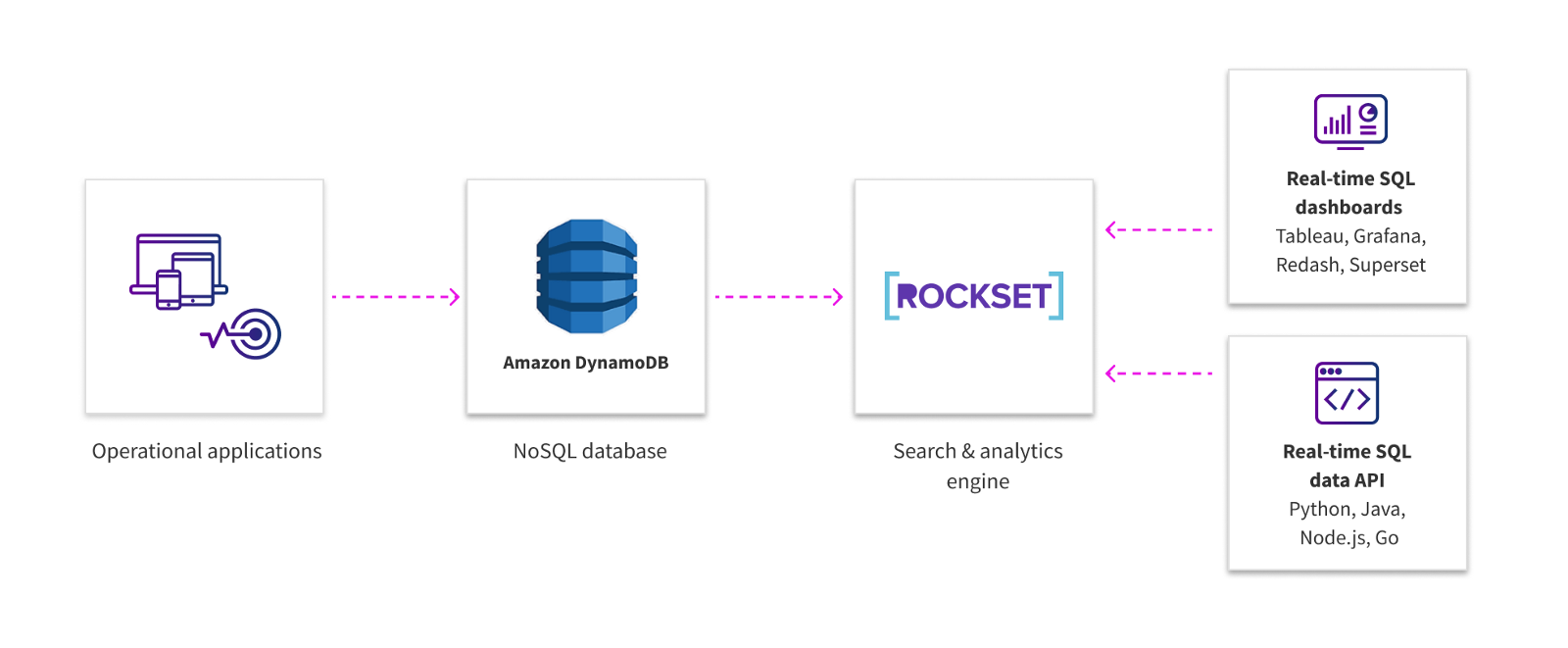

This is where analytics engines like Rockset come in. They not only provide a SQL layer that makes it easy to access the operational data but also provide the ability to ingest the data into their data layer — which supports joins with data from other AWS data sources, like Redshift and S3.

We’ve found tools like this to be helpful because they can reduce the need for developers and data engineers who can interface with DynamoDB. Instead, you can do your analytical work and answer the important questions without being held back.

For those who have ever worked on an analytical team, it can be very difficult to explain to upper management why data exists, but you’re still unable to provide insights. Using layers like Rockset can take the complexity away.

Conclusion

As a non-relational database, DynamoDB is a reliable system that helps small, medium and large enterprises scale their applications.

It comes with options to backup, restore and secure data, and is great for both mobile and web apps. With the exception of special services like financial transactions and healthcare, you can redesign almost any application with DynamoDB.

This non-relational database is extremely convenient to build event-driven architecture and user-friendly applications. Any shortcomings with analytic workloads are easily rectified with the use of an analytic-focused SQL layer, making DynamoDB a great asset for users.

Also, if you are looking to read/watch more great posts or videos:

What is Predictive Modeling

Hadoop Vs Relational Databases

How Algorithms Can Become Unethical and Biased

How To Improve Your Data Driven Strategy

How To Develop Robust Algorithms

4 Must Have Skills For Data Scientists

SQL Best Practices — Designing An ETL Video